Introduction

Artificial intelligence research continues to move quickly, and one active area lies where computer vision meets LiDAR sensing: cross-domain global place recognition between images and LiDAR. In this setting, a camera image must be matched against places that were mapped with 3D LiDAR scans. This article looks at an approach called "Voxel-Cross-Pixel", or simply 'VXP', proposed in the arXiv paper "[VXP: Voxel-Cross-Pixel Large-Scale Image-LIDAR Place Recognition](http://arxiv.org/abs/2403.14594v1)". The approach aims to make this kind of cross-sensor matching considerably more accurate.

The Problem Statement - Bridging the Gap between Images & Point Clouds

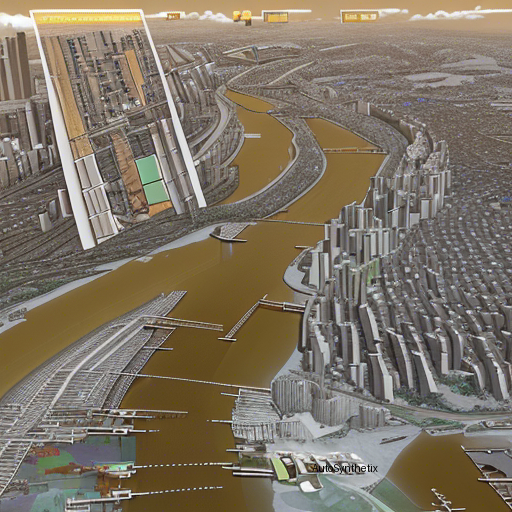

Conventional approaches usually handle image-based and LiDAR-based place recognition separately, because the two data types differ fundamentally: an image is a 2D grid of pixels, while a LiDAR scan is a set of 3D point coordinates. Each family of methods works well on its own. This separation becomes a problem, however, when a query from one modality must be matched against a database built from the other, because global descriptors extracted from 2D images and from 3D point clouds are not directly comparable. What is needed is a way to bridge raster imagery and range-scanned point clouds so that both can be described consistently.

Enter Voxel-Cross-Pixel - An Innovative Two-Stage Strategy

To address this, the authors introduce Voxel-Cross-Pixel (VXP), a two-stage approach that establishes correspondences between pixels in camera images and voxels in LiDAR point clouds. The goal is to bring both modalities into a shared feature space, so that descriptors from an image and from a LiDAR scan can be compared directly regardless of which sensor produced them. The two stages are described below.
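Once both modalities share a feature space, recognizing a place amounts to retrieval: compare the descriptor of a query image against the descriptors of LiDAR submaps in a database and return the closest matches. The sketch below is a hypothetical illustration, not VXP's code. It assumes the descriptors have already been computed and uses cosine similarity to compare them; the names `retrieve` and `lidar_database` and the toy 4-dimensional vectors are made up for this example.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so that dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def cosine_similarity(a, b):
    """Cosine similarity between two descriptors of equal length."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

def retrieve(query_descriptor, database, top_k=1):
    """Rank (place_id, descriptor) entries by similarity to the query; return the top_k IDs."""
    scored = [(cosine_similarity(query_descriptor, desc), place_id) for place_id, desc in database]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [place_id for _, place_id in scored[:top_k]]

# Hypothetical toy descriptors: one image query against three LiDAR submaps,
# assumed to already live in a shared feature space.
lidar_database = [
    ("submap_A", [0.9, 0.1, 0.0, 0.2]),
    ("submap_B", [0.0, 1.0, 0.3, 0.0]),
    ("submap_C", [0.1, 0.0, 1.0, 0.4]),
]
image_query = [0.8, 0.2, 0.1, 0.1]
print(retrieve(image_query, lidar_database, top_k=2))
```

A real system would hold many thousands of descriptors and would typically replace this linear scan with a nearest-neighbor index, but the ranking logic stays the same.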

Stage I: Exploiting Local Feature Correspondences

Stage I explicitly exploits local feature correspondences between images and LiDAR scans. Voxels from the point cloud are linked to the image pixels they correspond to, and the local descriptors of these matched voxel-pixel pairs are encouraged to be similar. This lays the foundation for the second stage.
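To make voxel-pixel correspondences concrete, here is a hypothetical sketch. It assumes the LiDAR points are already expressed in the camera frame (that is, the LiDAR-to-camera calibration has been applied), an ideal pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, and a squared-distance objective between matched features. All function names, parameter values, and toy feature maps are illustrative assumptions; the paper's actual voxelization, projection, and loss may differ.

```python
import math

def voxelize(points, voxel_size):
    """Bucket camera-frame 3D points into a voxel grid; return {voxel_index: centroid}."""
    buckets = {}
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        buckets.setdefault(key, []).append((x, y, z))
    return {
        key: tuple(sum(p[i] for p in pts) / len(pts) for i in range(3))
        for key, pts in buckets.items()
    }

def project_to_pixel(point, fx, fy, cx, cy, width, height):
    """Pinhole projection; returns (u, v), or None if behind the camera or off the image."""
    x, y, z = point
    if z <= 0:
        return None
    u = round(fx * x / z + cx)
    v = round(fy * y / z + cy)
    return (u, v) if 0 <= u < width and 0 <= v < height else None

def find_correspondences(centroids, fx, fy, cx, cy, width, height):
    """Pair each voxel index with the pixel that its centroid projects onto."""
    pairs = []
    for key, centroid in centroids.items():
        pixel = project_to_pixel(centroid, fx, fy, cx, cy, width, height)
        if pixel is not None:
            pairs.append((key, pixel))
    return pairs

def local_correspondence_loss(voxel_features, pixel_features, pairs):
    """Mean squared L2 distance between the features of matched voxel-pixel pairs."""
    if not pairs:
        return 0.0
    total = 0.0
    for key, pixel in pairs:
        total += sum((a - b) ** 2 for a, b in zip(voxel_features[key], pixel_features[pixel]))
    return total / len(pairs)

# Toy scene: the first two points share one voxel; the third projects outside the image.
points = [(0.2, 0.1, 4.3), (0.4, 0.3, 4.6), (5.0, 0.0, 2.0)]
centroids = voxelize(points, voxel_size=1.0)
pairs = find_correspondences(centroids, fx=100.0, fy=100.0, cx=50.0, cy=50.0, width=100, height=100)

# Hypothetical 2-D features standing in for the outputs of LiDAR and image encoders.
voxel_features = {key: [0.5, 0.5] for key in centroids}
pixel_features = {(u, v): [u / 100, v / 100] for u in range(100) for v in range(100)}
print(pairs, local_correspondence_loss(voxel_features, pixel_features, pairs))
```

In training, minimizing such a loss would push the two encoders to produce similar features wherever a voxel and a pixel observe the same part of the scene.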

Stage II: Enforcing Similarity of Global Descriptors

With local correspondences established in Stage I, the second stage enforces similarity between the global descriptors of the two modalities, so that an image and a LiDAR scan of the same place end up close together in the shared descriptor space. This alignment is what makes efficient cross-modal matching possible: place recognition then reduces to a nearest-neighbor search over global descriptors.
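A minimal sketch of a global similarity constraint follows, under clearly stated assumptions: local features are aggregated by mean pooling followed by L2 normalization, and the two global descriptors of the same place are pulled together with a squared Euclidean distance. This article does not describe VXP's actual aggregation or loss, so the pooling choice and the function names here are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def global_descriptor(local_features):
    """Hypothetical aggregation: mean-pool the local features, then L2-normalize."""
    dim = len(local_features[0])
    pooled = [sum(f[i] for f in local_features) / len(local_features) for i in range(dim)]
    return l2_normalize(pooled)

def global_similarity_loss(image_local_features, lidar_local_features):
    """Squared Euclidean distance between the two modalities' global descriptors."""
    g_image = global_descriptor(image_local_features)
    g_lidar = global_descriptor(lidar_local_features)
    return sum((a - b) ** 2 for a, b in zip(g_image, g_lidar))

# Toy local features for one place seen by both sensors (values are made up).
image_locals = [[1.0, 0.0], [0.0, 1.0]]
aligned_lidar_locals = [[2.0, 2.0]]
misaligned_lidar_locals = [[1.0, 0.0]]
print(global_similarity_loss(image_locals, aligned_lidar_locals))
print(global_similarity_loss(image_locals, misaligned_lidar_locals))
```

Because both descriptors have unit length, the squared distance equals 2 − 2 × (cosine similarity), so lowering this loss raises the cosine similarity used at retrieval time. A practical training objective would usually also push descriptors of different places apart, which this sketch omits.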

Experimental Verification - Outperforming State-of-the-Art Methods

The method was evaluated on three benchmark datasets, Oxford RobotCar, ViViD++, and KITTI, where the authors report that it surpasses state-of-the-art cross-modal retrieval methods by a large margin. These results support the practical applicability of the approach.
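This article does not say which metrics the evaluation uses. Place recognition results are often reported as Recall@k: the fraction of queries for which at least one correct place appears among the top k retrieved candidates. The following hypothetical sketch computes it; the rankings and ground-truth sets are invented. In practice, a retrieved place usually counts as correct when it lies within some distance threshold of the query's true position, and here that threshold is abstracted into the `truth` sets.

```python
def recall_at_k(ranked_results, ground_truth, k):
    """Fraction of queries whose top-k retrieved place IDs include at least one true match."""
    hits = 0
    for query_id, ranking in ranked_results.items():
        if set(ranking[:k]) & ground_truth[query_id]:
            hits += 1
    return hits / len(ranked_results)

# Hypothetical rankings of LiDAR submaps for four image queries (best match first).
ranked = {
    "img_0": ["submap_3", "submap_1", "submap_7"],
    "img_1": ["submap_2", "submap_5", "submap_4"],
    "img_2": ["submap_9", "submap_6", "submap_8"],
    "img_3": ["submap_0", "submap_4", "submap_2"],
}
# Hypothetical ground truth: submaps considered correct for each query.
truth = {
    "img_0": {"submap_3"},
    "img_1": {"submap_5"},
    "img_2": {"submap_1"},
    "img_3": {"submap_0"},
}
for k in (1, 2, 3):
    print(f"Recall@{k}: {recall_at_k(ranked, truth, k):.2f}")
```

In this toy data, two of the four queries are correct at rank 1 and a third becomes correct at rank 2. The fourth query never retrieves its true submap, so recall stops improving after k = 2.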

Conclusion

VXP shows that descriptors from very different sensors, 2D camera images and 3D LiDAR scans, can be brought into a shared space and matched reliably. As cross-modal perception matures, it will be interesting to see where this line of work goes next.

Credit goes to the authors of the original study. This article is only an attempt to present their work in an accessible format and to highlight its significance.

Source arXiv: http://arxiv.org/abs/2403.14594v1