Introduction
As technology reshapes industry after industry, agriculture is no exception. Generative AI models are changing how image data for agricultural environments can be gathered. One example is a study from the Center for Precision and Automated Agricultural Systems at Washington State University, which used OpenAI's DALL·E to create agricultural image datasets.

Background: Enter DALL·E – An Advanced Collaboration Between Visionary Technologies
OpenAI's DALL·E is a large multimodal machine learning model that generates digital images from text prompts, or from existing images used as visual cues. It works alongside ChatGPT, another OpenAI product, which handles natural-language processing. Together they link language and vision, turning textual descriptions into realistic renderings of the described content and opening up applications in many fields, including agriculture.

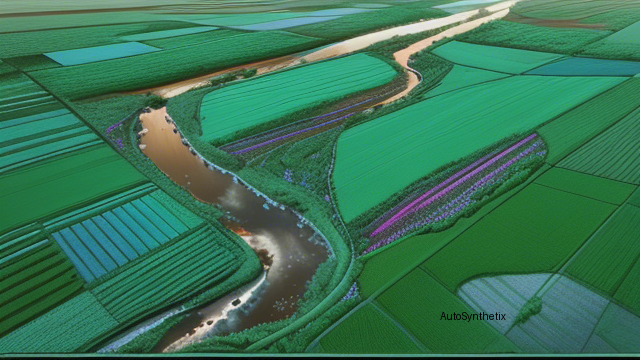
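The two input modes described above, a text prompt or an existing image, can be sketched with the OpenAI Python SDK (openai-python 1.x). This is a minimal, hypothetical sketch, not the study's code: the helper names, the choice of the `dall-e-2` model, and the assumption that "image-to-image" corresponds to DALL·E 2's image-variations endpoint are all illustrative. The client is passed in so the helpers can be exercised without network access.

```python
from typing import Any, List

# Hypothetical helpers. `client` is assumed to behave like `openai.OpenAI()`
# from openai-python >= 1.0; it is injected so the functions stay testable.


def text_to_image(client: Any, prompt: str, n: int = 1, size: str = "1024x1024") -> List[str]:
    """Text-to-image: ask DALL·E 2 (assumed model) to render `n` images from a prompt."""
    response = client.images.generate(model="dall-e-2", prompt=prompt, n=n, size=size)
    # With the default response format, each returned item carries a temporary URL.
    return [item.url for item in response.data]


def image_to_image(client: Any, image_path: str, n: int = 1, size: str = "1024x1024") -> List[str]:
    """Image-to-image, assumed here to mean DALL·E 2 variations of a seed photo."""
    with open(image_path, "rb") as image_file:
        response = client.images.create_variation(
            model="dall-e-2", image=image_file, n=n, size=size
        )
    return [item.url for item in response.data]
```

With a real client (`from openai import OpenAI; client = OpenAI()`, which reads `OPENAI_API_KEY`), a call such as `text_to_image(client, "apple orchard rows in summer", n=4)` would return image URLs; the prompt is only an example. The variations endpoint expects a square PNG seed image, so field photos would need cropping first.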

Methodology: Exploring Approaches to Image Creation in Agricultural Environments
The researchers used DALL·E in two ways to produce agricultural images: "text-to-image," which generates pictures from written prompts, and "image-to-image," which generates new pictures from an existing image. With these methods they created six image datasets depicting fruit crop environments, then compared the synthetic images against authentic photographs captured in real agricultural fields. Two standard metrics quantified the comparison: Peak Signal-to-Noise Ratio (PSNR) and Feature Similarity Index (FSIM). A qualitative human evaluation complemented these measurements by judging how realistic the images produced by each method looked.
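PSNR compares a generated image with a reference pixel by pixel: PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared pixel difference and MAX is the largest possible pixel value. Higher values mean the images are closer. Below is a minimal NumPy sketch, assuming both images are already aligned and resized to the same shape (the article does not say how generated and real images were matched) and stored as 8-bit values (MAX = 255). FSIM is not sketched here: it combines phase-congruency and gradient-magnitude maps and is too involved for a short example.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak Signal-to-Noise Ratio in dB between two same-shaped images.

    Assumes 8-bit images by default (max_value=255); works for grayscale
    (H, W) or color (H, W, C) arrays because the MSE averages over all values.
    """
    if reference.shape != test.shape:
        raise ValueError("images must have the same shape; resize or crop first")
    # Cast to float64 so uint8 subtraction cannot wrap around.
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For real use, scikit-image provides an equivalent function, `skimage.metrics.peak_signal_noise_ratio`, which takes a `data_range` argument in place of `max_value`.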

Results: Unveiling the Potential of AI-Generated Farmland Imagery
The experiments revealed clear differences between the text-to-image and image-to-image approaches. Image-to-image generation achieved a 5.78% higher average PSNR than text-to-image, indicating better image clarity and quality. However, it also showed a 10.23% lower average FSIM, meaning its images were less similar in structure and texture to the real farm scenes. For the "Crops Vs Weeds" dataset the differences were smaller: a 3.77% gain in PSNR accompanied by only a 0.76% reduction in FSIM. Human evaluation agreed with the quantitative measurements and confirmed visible differences in realism between the two methods, with image-to-image results judged more realistic.
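The percentages above are relative differences between the two methods. The article does not give the exact formula, so the sketch below assumes each figure compares the image-to-image average against the text-to-image average, computed as a percent change; the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence


def percent_change(new: float, baseline: float) -> float:
    """Relative change of `new` with respect to `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (new - baseline) / baseline


def compare_methods(baseline_scores: Sequence[float], new_scores: Sequence[float]) -> float:
    """Percent change of the mean score of one method over another.

    Assumption: baseline = per-dataset scores for text-to-image,
    new = per-dataset scores for image-to-image, on the same metric.
    """
    return percent_change(mean(new_scores), mean(baseline_scores))
```

Under this reading, a positive result for PSNR and a negative one for FSIM would correspond to the pattern reported above: image-to-image images are sharper but structurally less similar to the originals.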

Conclusion: Pioneering Agricultural Visualizations Through Generative AI Advances
Applying DALL·E in agricultural settings shows what becomes possible when state-of-the-art deep learning systems work together. The text-to-image and image-to-image approaches offer different tradeoffs: image-to-image yields higher PSNR, while text-to-image better preserves structural similarity as measured by FSIM. Together, they bring us closer to realistic synthetic imagery that can support next-generation precision agriculture technologies. As computing power continues to grow, AI is likely to reshape modern agriculture further.

Source arXiv: http://arxiv.org/abs/2307.08789v4